In psychodiagnostics, validity is one of the basic criteria that determine the quality of tests and techniques, and it is closely related to reliability. It is used to find out how well a technique measures exactly what it is aimed at: the more accurately the technique captures the quality under study, the higher its validity.

The question of validity arises first while the material is being developed, and again after a test or technique has been applied, when it is necessary to find out whether the measured degree of expression of a personality characteristic really corresponds to the method used to measure that property.

Validity is expressed by the correlation between the results of a test or technique and other characteristics studied alongside it, and it can also be substantiated comprehensively, using different techniques and criteria. Several types of validity are distinguished (conceptual, construct, criterion and content validity), each with its own methods for establishing its degree. Checking reliability is sometimes a mandatory requirement for psychodiagnostic methods whose quality is in doubt.

For psychological research to have real value, it must be both valid and reliable. Reliability gives the experimenter confidence that the measured value is very close to the true value. Validity matters because it indicates that what is being measured is exactly what the experimenter intends to measure. Note that validity implies reliability, but reliability does not imply validity: reliable measurements may be invalid, whereas valid ones must be reliable. This is the essence of successful research and testing.
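The asymmetry between reliability and validity can be illustrated with a small simulation. This is a minimal sketch under made-up assumptions: observed scores are simulated as a trait plus random error, reliability is estimated as the correlation between two administrations of the same measure, and validity as the correlation with the intended trait (which is directly observable only in a simulation). Measure A consistently tracks an unrelated trait, so it is reliable but not valid; measure B tracks the intended trait and is both.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


rng = random.Random(42)  # fixed seed so the simulation is repeatable
n = 500
intended = [rng.gauss(0, 1) for _ in range(n)]   # trait we want to measure
unrelated = [rng.gauss(0, 1) for _ in range(n)]  # some other trait


def administer(trait, error_sd=0.3):
    """One administration of a measure: observed score = trait + random error."""
    return [t + rng.gauss(0, error_sd) for t in trait]


# Measure A is consistent but tracks the wrong trait; measure B tracks the right one.
a1, a2 = administer(unrelated), administer(unrelated)
b1, b2 = administer(intended), administer(intended)

results = {
    "A": {"reliability": pearson(a1, a2), "validity": pearson(a1, intended)},
    "B": {"reliability": pearson(b1, b2), "validity": pearson(b1, intended)},
}
for name, r in results.items():
    print(name, {k: round(v, 2) for k, v in r.items()})
```

Both measures show a test-retest correlation of roughly 0.9, but only B correlates substantially with the trait it is supposed to measure.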

Validity in psychology

In psychology, validity refers to the experimenter's confidence that a given technique measured exactly what it was meant to measure, and it shows how well the results agree with the tasks the technique was set. A valid measurement is one that measures exactly what it was designed to measure. For example, a technique aimed at determining temperament should measure temperament and nothing else.

Validity is a very important aspect of experimental psychology: it is a key indicator of how trustworthy the results are, and it is often where the most problems arise. A perfect experiment would have impeccable validity, that is, it would demonstrate that the experimental effect is caused by changes in the independent variable and fully corresponds to reality, so that its results could be generalized without restriction. In practice, validity is discussed as a matter of degree: the higher it is, the more closely the results correspond to the objectives of the study.

Validity testing is carried out in three ways.

Content validity assessment establishes how well the technique corresponds to the real-world domain in which the property under study manifests itself. A related component is face validity (also called apparent validity), which characterizes how well the test meets the expectations of those being assessed. In most techniques it is considered very important that participants see an obvious connection between the content of the assessment procedure and the real object of assessment.

Construct validity assessment determines the degree to which the test actually measures the constructs it specifies, and whether those constructs are scientifically sound.

Construct validity has two dimensions. The first is convergent validation, which checks whether the results of a technique relate as expected to characteristics measured by other techniques aimed at the same properties. If a characteristic can be measured by several methods, a rational approach is to use at least two of them: a high positive correlation between their results supports the validity of the technique.

Convergent validation thus checks whether test scores vary as expected. The second dimension is discriminant validation: the technique should not correlate with characteristics to which, theoretically, it should be unrelated.
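Both checks come down to correlations. The sketch below uses simulated data, and all instrument names in it are hypothetical: a new test and an established test of the same trait should correlate highly (convergent validity), while the new test should show a near-zero correlation with a test of a theoretically unrelated trait (discriminant validity).

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


rng = random.Random(7)
n = 400
trait = [rng.gauss(0, 1) for _ in range(n)]      # construct the new test targets
unrelated = [rng.gauss(0, 1) for _ in range(n)]  # theoretically unrelated construct

# Hypothetical instruments: each score = underlying construct + measurement error.
new_test = [t + rng.gauss(0, 0.5) for t in trait]
established_test = [t + rng.gauss(0, 0.5) for t in trait]
unrelated_test = [u + rng.gauss(0, 0.5) for u in unrelated]

convergent_r = pearson(new_test, established_test)
discriminant_r = pearson(new_test, unrelated_test)
print(f"convergent r = {convergent_r:.2f}, discriminant r = {discriminant_r:.2f}")
```

With this error level the convergent correlation comes out around 0.8 and the discriminant one close to zero, the pattern that would support construct validity.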

Validity can also be established against a criterion: statistical methods are used to determine how well the results agree with predetermined external criteria. Such criteria can be direct measures, measures independent of the test results, or socially and organizationally significant performance indicators. Criterion validity also includes predictive validity, which is used when behavior needs to be predicted: if the prediction is borne out over time, the technique has predictive validity.

Reliability factors

The degree of reliability can be affected by a number of negative factors, the most significant of which are the following:

- imperfection of the methodology (incorrect or inaccurate instructions, unclear wording of tasks);

- temporary instability or constant fluctuations in the values of the indicator that is being studied;

- inadequacy of the environment in which initial and follow-up studies are conducted;

- the changing behavior of the researcher, as well as the instability of the subject’s condition;

- subjective approach when assessing test results.

Test validity

A test is a standardized task whose application yields data about a person's psychophysiological state, personal properties, knowledge, abilities and skills.

Validity and reliability of tests are two indicators that determine their quality.

Test validity is the degree to which the test corresponds to the quality, characteristic or psychological property it is meant to measure.

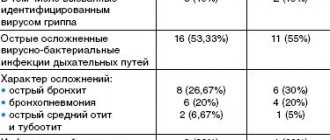

Test validity indicates how effective the test is and how applicable it is to measuring the required characteristic. The highest-quality tests have a validity of about 80%. When validating a test, keep in mind that the results depend on the number of subjects and their characteristics. Note also that a test can be highly reliable and yet completely invalid.

There are several approaches to determining the validity of a test.

Construct validity is used when measuring a complex psychological phenomenon that has a hierarchical structure and cannot be studied with a single test. It determines how accurately testing captures such complex, structured phenomena and personality traits.

Criterion validity shows how well a test characterizes the psychological phenomenon under study at the present moment and predicts its characteristics in the future. To establish it, the test results are correlated with how developed the measured quality is in practice, for example with specific abilities shown in a particular activity. If the validity coefficient is at least 0.2, the use of the test is considered justified.
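Computing a criterion validity coefficient is again a matter of correlation, here between test scores and an external criterion. A minimal sketch, assuming simulated data: `test_scores` and `performance` are hypothetical, the criterion is built to depend only moderately on the tested ability, and the 0.2 cut-off is the one given above. If the criterion is collected some time after testing, the same calculation yields a predictive validity coefficient.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


MIN_VALIDITY = 0.2  # threshold stated in the text

rng = random.Random(3)
n = 300
test_scores = [rng.gauss(0, 1) for _ in range(n)]
# Hypothetical external criterion (e.g. rated performance in an activity):
# partly driven by the tested ability, mostly by other factors.
performance = [0.4 * s + rng.gauss(0, 0.9) for s in test_scores]

validity_coefficient = pearson(test_scores, performance)
justified = validity_coefficient >= MIN_VALIDITY
print(f"validity = {validity_coefficient:.2f}, use justified: {justified}")
```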

Content validity shows how well the test covers the full scope of the psychological constructs it is meant to measure, that is, how complete its set of measured indicators is.

Predictive validity is a criterion that allows one to predict how the quality under study will develop in the future. It is very valuable from a practical point of view, but it can be difficult to establish, because the quality may develop unevenly in different people.

Test reliability is a criterion that measures how consistent test results are across repeated studies. It is determined by retesting after a certain interval and calculating the correlation coefficient between the results of the first and second testing. The peculiarities of the test procedure itself and the socio-psychological composition of the sample must also be taken into account: the same test can show different reliability depending on the gender, age and social status of the subjects. Reliability estimates can thus contain inaccuracies and errors that arise from the research process itself, so ways are sought to reduce the influence of such factors on testing. A test is generally considered reliable if its reliability coefficient is 0.8-0.9.
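The test-retest procedure can be sketched as follows. The scores are hypothetical (ten subjects tested twice), and using 0.8 as the cut-off is an assumption based on the 0.8-0.9 range mentioned in the text.

```python
def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


# Hypothetical scores of the same ten subjects on two testing sessions.
first_session = [12, 15, 9, 20, 17, 11, 14, 18, 8, 16]
second_session = [13, 14, 10, 19, 18, 10, 15, 17, 9, 16]

RELIABILITY_CUTOFF = 0.8  # assumed lower bound, from the 0.8-0.9 range in the text
retest_r = pearson(first_session, second_session)
print(f"test-retest r = {retest_r:.2f}, reliable: {retest_r >= RELIABILITY_CUTOFF}")
```

For these made-up scores the coefficient is about 0.97, because every subject's second score differs from the first by at most one point.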

The validity and reliability of tests are very important because they define the test as a measuring instrument. When reliability and validity are unknown, the test is considered unsuitable for use.

Measuring reliability and validity also has an ethical dimension. This is especially important when test results feed into life-changing decisions about people. Some people are hired while others are rejected; some students are admitted to educational institutions while others must first finish their current studies; some people receive a psychiatric diagnosis and treatment while others are judged healthy. All these decisions are based on assessments of behavior or special abilities. For example, a job seeker whose test scores decide whether he is hired would be justifiably disappointed to learn that the test was not sufficiently valid and reliable.

What is reliability

Reliability testing assesses how consistent the results are when the test is repeated. Discrepancies between the data should be absent or insignificant; otherwise the test results cannot be trusted. Test reliability is a criterion that indicates the accuracy of measurement. The following test properties are considered essential:

- reproducibility of the results obtained from the study;

- the degree of accuracy of the measurement technique or related instruments;

- stability of results over a certain period of time.

In the interpretation of reliability, the following main components can be distinguished:

- the reliability of the measuring instrument itself (that is, how well constructed and objective the test items are), which can be assessed by calculating the corresponding coefficient;

- the stability of the characteristic being studied over a long period of time, as well as the predictability and smoothness of its fluctuations;

- objectivity of the result (that is, its independence from the personal preferences of the researcher).

Validity of a technique

The validity of a technique is the degree to which what the technique actually studies corresponds to what it is intended to study.

For example, if a technique based on conscious self-report is used to study a personality quality that people cannot truly assess in themselves, the technique will not be valid.

In most such cases, the subject's answers about whether he possesses the quality reflect how he perceives himself, or how he would like to appear to other people, rather than the quality itself.

Validity is also a basic requirement for psychological methods that study psychological constructs. There are many types of validity, and there is as yet no consensus on how to name them or on which specific types a technique must satisfy. If a technique turns out to be externally or internally invalid, it should not be used. There are two approaches to validating a technique.

The theoretical approach shows how accurately the technique measures exactly the quality that the researcher has defined and intends to measure. This is established by comparing it with related indicators and with indicators to which it should have no connection. Confirming theoretical validity therefore requires showing a strong connection with a related technique (convergent validity) and the absence of such a connection with techniques that have a different theoretical basis (discriminant validity).

Validity can be assessed quantitatively or qualitatively. The pragmatic approach evaluates the effectiveness and practical significance of a technique; it uses an independent external criterion as an indicator of how the quality manifests itself in everyday life. Such a criterion can be, for example, academic performance (for achievement tests and intelligence tests), subjective assessments (for personality methods), or specific abilities such as drawing or modeling (for methods that assess special abilities).

Four types of external criteria are distinguished for establishing validity:

- performance criteria, such as the number of tasks completed or the time spent on training;

- subjective criteria, obtained through questionnaires and interviews;

- physiological criteria, such as heart rate, blood pressure and physical symptoms;

- criteria of chance, used when the outcome is related to or influenced by particular events or circumstances.

When choosing a research technique, it is of theoretical and practical importance to determine the scope of the characteristics it studies, since this is an important component of validity. The name of a technique is almost never enough to judge its scope; much more lies behind it. A good example is the proofreading test. The properties it studies include concentration and stability of attention and the speed of psychomotor processes. The test assesses how strongly these qualities are expressed in a person, correlates well with values obtained by other methods and has good validity. At the same time, its results are also strongly influenced by other factors, with respect to which the test is nonspecific; if it is used to measure those factors, its validity will be low.

Defining the scope of a technique therefore shows how valid its results are. The fewer extraneous factors influence the results, the more reliable the estimates obtained with the technique. The reliability of the results also depends on the set of measured properties, their importance for diagnosing complex activities, and how fully the material of the technique represents the subject of measurement. For example, to meet the requirements of validity and reliability, a technique intended for professional selection must analyze a wide range of the indicators that matter most for success in the profession.

Basic requirements for criteria

External criteria used to establish test validity must meet the following basic requirements:

- compliance with the particular area in which the research is being conducted, relevance, as well as semantic connection with the diagnostic model;

- the absence of any interference or sharp breaks in the sample (the point is that all participants in the experiment must meet pre-established parameters and be in similar conditions);

- the parameter under study must be reliable, constant and not subject to sudden changes.

Conclusions

Test validity and reliability are complementary indicators that together give the most complete assessment of how trustworthy and meaningful research results are. They are often determined at the same time.

Reliability shows how far the test results can be trusted, that is, how consistent they remain each time the same test is repeated with the same participants. Low reliability may indicate intentional distortion or a careless approach.

The concept of test validity is associated with the qualitative side of the experiment. We are talking about whether the chosen tool corresponds to the assessment of a particular psychological phenomenon. Here, both qualitative indicators (theoretical assessment) and quantitative indicators (calculation of the corresponding coefficients) can be used.

Reliability as internal consistency

Internal consistency is determined by how strongly each test item is related to the overall result, how far each item conflicts with the others, and how far each individual question measures the characteristic that the entire test is aimed at. Most tests are designed to have high internal consistency, on the reasoning that if one part of a test measures a given variable, other parts that are inconsistent with it cannot be measuring the same variable. On this view, a test must be internally consistent in order to be valid.

However, there is also the opposite view. Cattell suggested that high internal consistency actually works against validity: each question should cover a narrower area than the criterion being measured. If all items are highly consistent, they are highly correlated with one another, so a reliable test will measure only a relatively narrow variable with little variance. According to Cattell's reasoning, validity is maximal when the test items are uncorrelated with each other and each of them correlates positively with the criterion. Such a test, however, will have low internal consistency reliability.
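Cattell's argument can be checked with a small simulation. This is a minimal sketch under deliberately artificial assumptions: four items are generated independently of one another, and the criterion is defined as their sum plus noise. The items are then practically uncorrelated, yet each correlates with the criterion and their total correlates with it strongly. Internal consistency is summarized here with standardized alpha, k * r_mean / (1 + (k - 1) * r_mean), where r_mean is the mean inter-item correlation.

```python
import random
from itertools import combinations


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


rng = random.Random(11)
n, k = 1000, 4
# Assumption: each item taps a different, independent facet of the criterion.
items = [[rng.gauss(0, 1) for _ in range(n)] for _ in range(k)]
totals = [sum(person) for person in zip(*items)]  # total test score per person
criterion = [t + rng.gauss(0, 1) for t in totals]

inter_item = [pearson(items[i], items[j]) for i, j in combinations(range(k), 2)]
mean_r = sum(inter_item) / len(inter_item)
standardized_alpha = k * mean_r / (1 + (k - 1) * mean_r)

item_validities = [pearson(item, criterion) for item in items]
total_validity = pearson(totals, criterion)
print(f"mean inter-item r = {mean_r:.2f}, standardized alpha = {standardized_alpha:.2f}")
print("item-criterion r:", [round(r, 2) for r in item_validities])
print(f"total-criterion r = {total_validity:.2f}")
```

Internal consistency comes out near zero while the total score correlates with the criterion at roughly 0.9, which is the situation Cattell describes.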

To check internal consistency the following are used:

- Split-half method (method of independent parts)

- Equivalent forms method

- Cronbach's alpha
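As an illustration of the splitting (split-half) method, the sketch below divides a simulated test into odd- and even-numbered items, correlates the two half-scores, and applies the Spearman-Brown correction, r_full = 2r / (1 + r), to estimate the reliability of the full-length test. The Spearman-Brown step is a standard addition not described in the text, and the data are simulated. The equivalent forms method uses the same correlation, but between scores on two parallel versions of the test.

```python
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


rng = random.Random(5)
n_people, n_items = 300, 10
responses = []
for _ in range(n_people):
    trait = rng.gauss(0, 1)
    # Every item reflects the same trait plus item-specific error.
    responses.append([trait + rng.gauss(0, 1) for _ in range(n_items)])

odd_half = [sum(row[0::2]) for row in responses]   # items 1, 3, 5, ...
even_half = [sum(row[1::2]) for row in responses]  # items 2, 4, 6, ...

r_half = pearson(odd_half, even_half)
r_full = 2 * r_half / (1 + r_half)  # Spearman-Brown correction to full length
print(f"half-test r = {r_half:.2f}, corrected reliability = {r_full:.2f}")
```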

Cronbach's alpha

Cronbach's alpha generally increases as the intercorrelations between items increase, and it is therefore regarded as a marker of the internal consistency of test scores. Since intercorrelations across all items are highest when they all measure the same thing, alpha indirectly indicates the extent to which all items measure the same thing. Alpha is therefore most appropriate when all items are intended to measure the same phenomenon or property. Note, however, that a high value shows that a set of items has a common basis but not that a single factor underlies them; the unidimensionality of the scale must be confirmed by additional methods. When a heterogeneous construct is measured, alpha will often be low, so it is not suitable for assessing the reliability of intentionally heterogeneous instruments (for example, the original MMPI); in such cases it makes sense to compute it separately for each scale.

Professionally developed tests are expected to have an internal consistency of at least 0.90.
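Cronbach's alpha is computed from item variances: alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. A minimal sketch on simulated data (eight items that all reflect one trait; the data are invented for illustration), compared against the 0.90 benchmark mentioned above:

```python
import random
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items matrix (list of rows)."""
    k = len(scores[0])                  # number of items
    items = list(zip(*scores))          # columns = items
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Simulated data: 200 respondents, 8 items = common trait + item-specific noise.
rng = random.Random(1)
scale = []
for _ in range(200):
    trait = rng.gauss(0, 1)
    scale.append([trait + rng.gauss(0, 0.6) for _ in range(8)])

alpha = cronbach_alpha(scale)
print(f"alpha = {alpha:.2f}", "meets 0.90" if alpha >= 0.90 else "below 0.90")
```

If every item gave exactly the same answers, alpha would equal 1; items that share nothing would push it toward 0.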

The alpha coefficient can also be used for other kinds of problems. For example, it can measure the degree of agreement between experts rating the same object, the stability of data across repeated measurements, and so on.